
Convolutional Neural Networks - Week 3 Lab

Google ML Bootcamp 2022/Coursera mission · 2022. 7. 31. 20:57

There have been a lot of fun assignments lately, so I keep writing lab posts.

So exciting!

1. Car detection with YOLO

2. Image Segmentation with U-Net

Car detection with YOLO

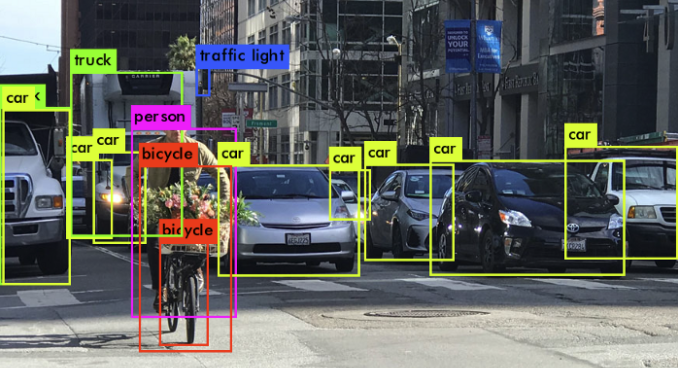

Looks familiar, doesn't it? Let's build a YOLO model that draws bounding boxes like the ones above.

I'll skip the explanation of YOLO itself and go straight to implementing it one function at a time.

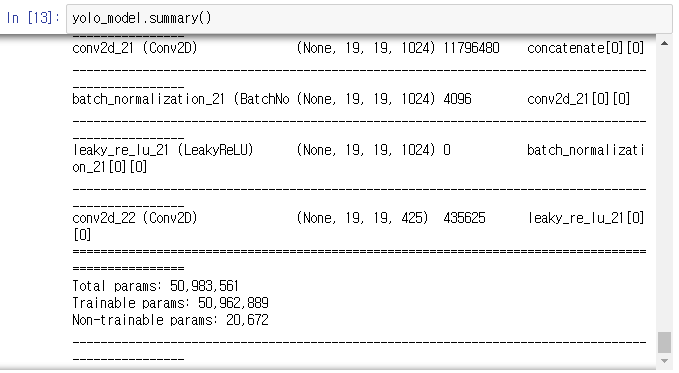

As the tensor shapes show, the model works on a 19 x 19 grid with 5 anchor boxes per cell.
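
Per anchor box, the model predicts 85 = 1 + 4 + 80 numbers: an object confidence p_c, four box coordinates, and 80 class probabilities. A minimal NumPy sketch of how one image's output splits into the three tensors the filtering step uses, assuming the raw output is a (19, 19, 425) array laid out as [p_c, b_x, b_y, b_h, b_w, c_1..c_80] per anchor (the real pipeline also applies activations through yolo_head, which this skips):

```python
import numpy as np

# Assumption: raw output for one image is (19, 19, 425) = 5 anchors x 85 values per cell
GRID, ANCHORS, N_CLASSES = 19, 5, 80

rng = np.random.default_rng(0)
raw = rng.random((GRID, GRID, ANCHORS * (5 + N_CLASSES)))

# Split the last axis per anchor: 425 -> 5 x 85
per_anchor = raw.reshape(GRID, GRID, ANCHORS, 5 + N_CLASSES)

box_confidence = per_anchor[..., 0:1]   # p_c, shape (19, 19, 5, 1)
boxes = per_anchor[..., 1:5]            # (b_x, b_y, b_h, b_w), shape (19, 19, 5, 4)
box_class_probs = per_anchor[..., 5:]   # c_1..c_80, shape (19, 19, 5, 80)

print(per_anchor.shape, box_confidence.shape, boxes.shape, box_class_probs.shape)
```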

First, a function that filters the boxes.

For every anchor box it finds the class with the highest score and discards the box if that score falls below the threshold.
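
The same score-thresholding logic can be sketched in NumPy on a tiny made-up input (a 1 x 1 grid with 2 anchors and 3 classes). This is only an illustration of the steps, not the graded TensorFlow function; filter_boxes_np is a hypothetical name:

```python
import numpy as np

def filter_boxes_np(boxes, box_confidence, box_class_probs, threshold=0.6):
    """NumPy sketch of YOLO score thresholding."""
    # Score of each class for each anchor box: p_c * c_i
    box_scores = box_confidence * box_class_probs
    # Best class and its score for every anchor box (last axis = classes)
    box_classes = np.argmax(box_scores, axis=-1)
    box_class_scores = np.max(box_scores, axis=-1)
    # Keep only boxes whose best score clears the threshold
    mask = box_class_scores >= threshold
    return box_class_scores[mask], boxes[mask], box_classes[mask]

# Made-up example: anchor 0 is a confident box, anchor 1 is not
boxes = np.array([[[[0.5, 0.5, 0.2, 0.2], [0.3, 0.3, 0.1, 0.1]]]])  # (1, 1, 2, 4)
box_confidence = np.array([[[[0.9], [0.4]]]])                         # (1, 1, 2, 1)
box_class_probs = np.array([[[[0.1, 0.8, 0.1], [0.3, 0.3, 0.4]]]])   # (1, 1, 2, 3)

scores, kept_boxes, classes = filter_boxes_np(boxes, box_confidence, box_class_probs)
print(scores, kept_boxes, classes)
```

Anchor 0 scores 0.9 x 0.8 = 0.72 for class 1 and survives; anchor 1's best score is 0.4 x 0.4 = 0.16, so it is dropped.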

```python
import tensorflow as tf

def yolo_filter_boxes(boxes, box_confidence, box_class_probs, threshold = .6):
    """Filters YOLO boxes by thresholding on object and class confidence.

    Arguments:
        boxes -- tensor of shape (19, 19, 5, 4)
        box_confidence -- tensor of shape (19, 19, 5, 1)
        box_class_probs -- tensor of shape (19, 19, 5, 80)
        threshold -- real value, if [ highest class probability score < threshold],
                     then get rid of the corresponding box

    Returns:
        scores -- tensor of shape (None,), containing the class probability score for selected boxes
        boxes -- tensor of shape (None, 4), containing (b_x, b_y, b_h, b_w) coordinates of selected boxes
        classes -- tensor of shape (None,), containing the index of the class detected by the selected boxes

    Note: "None" is here because you don't know the exact number of selected boxes, as it depends on the threshold.
    For example, the actual output size of scores would be (10,) if there are 10 boxes.
    """
    # Step 1: Compute box scores
    box_scores = box_confidence * box_class_probs

    # Step 2: Find the box_classes using the max box_scores, keep track of the corresponding score
    # IMPORTANT: set axis to -1 (the max is taken over the classes of each anchor box)
    box_classes = tf.math.argmax(box_scores, axis=-1)
    box_class_scores = tf.math.reduce_max(box_scores, axis=-1)

    # Step 3: Create a filtering mask based on "box_class_scores" by using "threshold". The mask should have the
    # same dimension as box_class_scores, and be True for the boxes you want to keep (with probability >= threshold)
    filtering_mask = box_class_scores >= threshold

    # Step 4: Apply the mask to box_class_scores, boxes and box_classes
    scores = tf.boolean_mask(box_class_scores, filtering_mask)
    boxes = tf.boolean_mask(boxes, filtering_mask)
    classes = tf.boolean_mask(box_classes, filtering_mask)

    return scores, boxes, classes
```

Next comes non-max suppression.

Let's implement IoU first.

```python
def iou(box1, box2):
    """Implement the intersection over union (IoU) between box1 and box2

    Arguments:
        box1 -- first box, list object with coordinates (box1_x1, box1_y1, box1_x2, box1_y2)
        box2 -- second box, list object with coordinates (box2_x1, box2_y1, box2_x2, box2_y2)
    """
    (box1_x1, box1_y1, box1_x2, box1_y2) = box1
    (box2_x1, box2_y1, box2_x2, box2_y2) = box2

    # Calculate the (yi1, xi1, yi2, xi2) coordinates of the intersection of box1 and box2. Calculate its Area.
    xi1 = max(box1_x1, box2_x1)
    yi1 = max(box1_y1, box2_y1)
    xi2 = min(box1_x2, box2_x2)
    yi2 = min(box1_y2, box2_y2)
    inter_width = max(0, xi2 - xi1)   # width runs along x
    inter_height = max(0, yi2 - yi1)  # height runs along y
    inter_area = inter_width * inter_height

    # Calculate the Union area by using Formula: Union(A,B) = A + B - Inter(A,B)
    box1_area = (box1_x2 - box1_x1) * (box1_y2 - box1_y1)
    box2_area = (box2_x2 - box2_x1) * (box2_y2 - box2_y1)
    union_area = box1_area + box2_area - inter_area

    # compute the IoU
    iou = inter_area / union_area

    return iou
```

Given two boxes, it's just a matter of computing this carefully.
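
A worked example of the same formula, using two made-up boxes in (x1, y1, x2, y2) form:

$$
\begin{aligned}
\text{IoU}(A, B) &= \frac{\text{Inter}(A, B)}{A + B - \text{Inter}(A, B)} \\
\text{box1} = (2, 1, 4, 3),\ \text{box2} = (1, 2, 3, 4) &\Rightarrow (x_{i1}, y_{i1}, x_{i2}, y_{i2}) = (2, 2, 3, 3) \\
\text{Inter} = (3 - 2)(3 - 2) = 1,\quad A = B = 2 \cdot 2 = 4 &\Rightarrow \text{IoU} = \frac{1}{4 + 4 - 1} = \frac{1}{7} \approx 0.143
\end{aligned}
$$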

In practice, though, TensorFlow already provides tf.image.non_max_suppression, so you don't have to implement NMS yourself.
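
To see roughly what tf.image.non_max_suppression does, here is a plain NumPy sketch of greedy NMS: repeatedly keep the highest-scoring remaining box and drop every remaining box that overlaps it by more than iou_threshold. This is my own illustration, not TensorFlow's implementation; it assumes (y1, x1, y2, x2) corners with y1 < y2 and x1 < x2, and iou_np and greedy_nms are hypothetical names.

```python
import numpy as np

def iou_np(box, others):
    """IoU between one box and an array of boxes, all as (y1, x1, y2, x2)."""
    y1 = np.maximum(box[0], others[:, 0])
    x1 = np.maximum(box[1], others[:, 1])
    y2 = np.minimum(box[2], others[:, 2])
    x2 = np.minimum(box[3], others[:, 3])
    inter = np.maximum(0, y2 - y1) * np.maximum(0, x2 - x1)
    area_box = (box[2] - box[0]) * (box[3] - box[1])
    area_others = (others[:, 2] - others[:, 0]) * (others[:, 3] - others[:, 1])
    return inter / (area_box + area_others - inter)

def greedy_nms(boxes, scores, max_boxes=10, iou_threshold=0.5):
    """Greedy NMS sketch: returns indices of kept boxes, highest score first."""
    order = np.argsort(scores)[::-1]  # candidate indices, best score first
    keep = []
    while order.size > 0 and len(keep) < max_boxes:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        # Drop remaining boxes that overlap the chosen box more than iou_threshold
        overlaps = iou_np(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_threshold]
    return np.array(keep, dtype=np.int64)

boxes = np.array([[0.0, 0.0, 2.0, 2.0],   # (y1, x1, y2, x2)
                  [0.0, 0.2, 2.0, 2.2],   # heavily overlaps box 0 (IoU about 0.82)
                  [3.0, 3.0, 5.0, 5.0]])  # far away from both
scores = np.array([0.9, 0.8, 0.7])
print(greedy_nms(boxes, scores))
```

Box 1 is suppressed by the higher-scoring box 0, while box 2 survives because it doesn't overlap anything.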

Non-max suppression is implemented as follows.

The tf functions were a bit unfamiliar to me; I'll study them later.

```python
def yolo_non_max_suppression(scores, boxes, classes, max_boxes = 10, iou_threshold = 0.5):
    """
    Applies Non-max suppression (NMS) to set of boxes

    Arguments:
    scores -- tensor of shape (None,), output of yolo_filter_boxes()
    boxes -- tensor of shape (None, 4), output of yolo_filter_boxes() that have been scaled to the image size (see later)
    classes -- tensor of shape (None,), output of yolo_filter_boxes()
    max_boxes -- integer, maximum number of predicted boxes you'd like
    iou_threshold -- real value, "intersection over union" threshold used for NMS filtering

    Returns:
    scores -- tensor of shape (None, ), predicted score for each box
    boxes -- tensor of shape (None, 4), predicted box coordinates
    classes -- tensor of shape (None, ), predicted class for each box

    Note: The "None" dimension of the output tensors has to be at most max_boxes. Note also that this
    function will transpose the shapes of scores, boxes, classes. This is made for convenience.
    """
    max_boxes_tensor = tf.Variable(max_boxes, dtype='int32')  # tensor to be used in tf.image.non_max_suppression()

    # Use tf.image.non_max_suppression() to get the list of indices corresponding to boxes you keep
    nms_indices = tf.image.non_max_suppression(boxes, scores, max_boxes_tensor, iou_threshold)

    # Use tf.gather() to select only nms_indices from scores, boxes and classes
    scores = tf.gather(scores, nms_indices)
    boxes = tf.gather(boxes, nms_indices)
    classes = tf.gather(classes, nms_indices)

    return scores, boxes, classes
```

The next one is just a helper that converts the boxes YOLO predicts from center/size form into corner coordinates.

Let's just take it as given.
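
To make the conversion concrete, here is the same arithmetic in NumPy on one made-up box. boxes_to_corners_np is a hypothetical name, and I'm assuming box_xy is (x, y) and box_wh is (w, h), which is what the slicing in yolo_boxes_to_corners implies:

```python
import numpy as np

def boxes_to_corners_np(box_xy, box_wh):
    """Center/size -> (y_min, x_min, y_max, x_max), mirroring yolo_boxes_to_corners."""
    box_mins = box_xy - box_wh / 2.0
    box_maxes = box_xy + box_wh / 2.0
    # Reorder from (x, y) to (y, x) ordering, as the TF helper does
    return np.concatenate([box_mins[..., 1:2], box_mins[..., 0:1],
                           box_maxes[..., 1:2], box_maxes[..., 0:1]], axis=-1)

# A made-up box centered at x=0.5, y=0.4 with width 0.2 and height 0.6
corners = boxes_to_corners_np(np.array([0.5, 0.4]), np.array([0.2, 0.6]))
print(corners)  # approximately [0.1, 0.4, 0.7, 0.6]
```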

```python
def yolo_boxes_to_corners(box_xy, box_wh):
    """Convert YOLO box predictions to bounding box corners."""
    box_mins = box_xy - (box_wh / 2.)
    box_maxes = box_xy + (box_wh / 2.)

    return tf.keras.backend.concatenate([
        box_mins[..., 1:2],   # y_min
        box_mins[..., 0:1],   # x_min
        box_maxes[..., 1:2],  # y_max
        box_maxes[..., 0:1]   # x_max
    ])
```

Now let's put together everything implemented so far.

This function takes what the conv network computed and runs it through the YOLO post-processing steps above.

```python
def yolo_eval(yolo_outputs, image_shape = (720, 1280), max_boxes=10, score_threshold=.6, iou_threshold=.5):
    """
    Converts the output of YOLO encoding (a lot of boxes) to your predicted boxes along with their scores, box coordinates and classes.

    Arguments:
    yolo_outputs -- output of the encoding model (for image_shape of (608, 608, 3)), contains 4 tensors:
                    box_xy: tensor of shape (None, 19, 19, 5, 2)
                    box_wh: tensor of shape (None, 19, 19, 5, 2)
                    box_confidence: tensor of shape (None, 19, 19, 5, 1)
                    box_class_probs: tensor of shape (None, 19, 19, 5, 80)
    image_shape -- tensor of shape (2,) containing the input shape, in this notebook we use (608., 608.) (has to be float32 dtype)
    max_boxes -- integer, maximum number of predicted boxes you'd like
    score_threshold -- real value, if [ highest class probability score < threshold], then get rid of the corresponding box
    iou_threshold -- real value, "intersection over union" threshold used for NMS filtering

    Returns:
    scores -- tensor of shape (None, ), predicted score for each box
    boxes -- tensor of shape (None, 4), predicted box coordinates
    classes -- tensor of shape (None,), predicted class for each box
    """
    # Retrieve outputs of the YOLO model
    box_xy, box_wh, box_confidence, box_class_probs = yolo_outputs

    # Convert boxes to be ready for filtering functions (convert boxes box_xy and box_wh to corner coordinates)
    boxes = yolo_boxes_to_corners(box_xy, box_wh)

    # Use one of the functions you've implemented to perform Score-filtering with a threshold of score_threshold
    scores, boxes, classes = yolo_filter_boxes(boxes, box_confidence, box_class_probs, score_threshold)

    # Scale boxes back to original image shape.
    # (scale_boxes is a helper provided with the assignment; it is not defined in this post)
    boxes = scale_boxes(boxes, image_shape)

    # Use one of the functions you've implemented to perform Non-max suppression with
    # maximum number of boxes set to max_boxes and a threshold of iou_threshold
    scores, boxes, classes = yolo_non_max_suppression(scores, boxes, classes, max_boxes, iou_threshold)

    return scores, boxes, classes
```

Implementation done.
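
scale_boxes isn't shown in this post. As far as I understand it, it rescales corner coordinates predicted in normalized [0, 1] units to pixels by multiplying by (height, width, height, width). A hypothetical NumPy stand-in under that assumption (scale_boxes_np is my own name, not the assignment's helper):

```python
import numpy as np

def scale_boxes_np(boxes, image_shape):
    """Hypothetical stand-in for scale_boxes: normalized corners -> pixel corners."""
    height, width = image_shape
    # Corners are (y_min, x_min, y_max, x_max), so scale by (h, w, h, w)
    image_dims = np.array([height, width, height, width], dtype=np.float32).reshape(1, 4)
    return boxes * image_dims

boxes = np.array([[0.1, 0.4, 0.7, 0.6]])
pixel_boxes = scale_boxes_np(boxes, (720, 1280))
print(pixel_boxes)  # approximately [[72, 512, 504, 768]]
```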

Let's see it in action.

The loaded model is hefty: about 50 million parameters.

```python
import os

from PIL import Image
from matplotlib.pyplot import imshow

# preprocess_image, yolo_head, get_colors_for_classes and draw_boxes come from the assignment's
# utility code; yolo_model, anchors and class_names are assumed to be loaded earlier in the notebook.

def predict(image_file):
    """
    Runs the graph to predict boxes for "image_file". Prints and plots the predictions.

    Arguments:
    image_file -- name of an image stored in the "images" folder.

    Returns:
    out_scores -- tensor of shape (None, ), scores of the predicted boxes
    out_boxes -- tensor of shape (None, 4), coordinates of the predicted boxes
    out_classes -- tensor of shape (None, ), class index of the predicted boxes

    Note: "None" actually represents the number of predicted boxes, it varies between 0 and max_boxes.
    """
    # Preprocess your image
    image, image_data = preprocess_image("images/" + image_file, model_image_size = (608, 608))

    yolo_model_outputs = yolo_model(image_data)
    yolo_outputs = yolo_head(yolo_model_outputs, anchors, len(class_names))

    # PIL's image.size is (width, height), so pass [height, width]
    out_scores, out_boxes, out_classes = yolo_eval(yolo_outputs, [image.size[1], image.size[0]], 10, 0.3, 0.5)

    # Print predictions info
    print('Found {} boxes for {}'.format(len(out_boxes), "images/" + image_file))
    # Generate colors for drawing bounding boxes.
    colors = get_colors_for_classes(len(class_names))
    # Draw bounding boxes on the image file
    draw_boxes(image, out_boxes, out_classes, class_names, out_scores)
    # Save the predicted bounding box on the image
    image.save(os.path.join("out", image_file), quality=100)
    # Display the results in the notebook
    output_image = Image.open(os.path.join("out", image_file))
    imshow(output_image)

    return out_scores, out_boxes, out_classes
```

Put the prediction function together like this and run it...

It works well.

Image Segmentation with U-Net

Next, let's implement U-Net, which labels (colors in) every pixel of the image.

The dataset itself also has to include masks, i.e. a class label for every pixel.

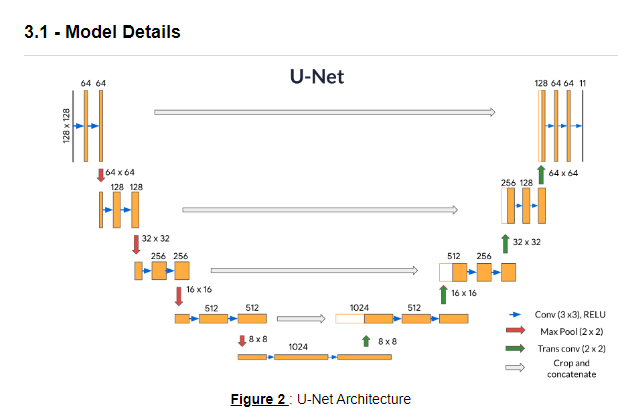

The full model looks like the figure below.

So pretty... Let's split it in half and take it slowly, implementing one Encoder block and one Decoder block.

Each Encoder block has to save the skip connection that the Decoder (the upward path) will need later.

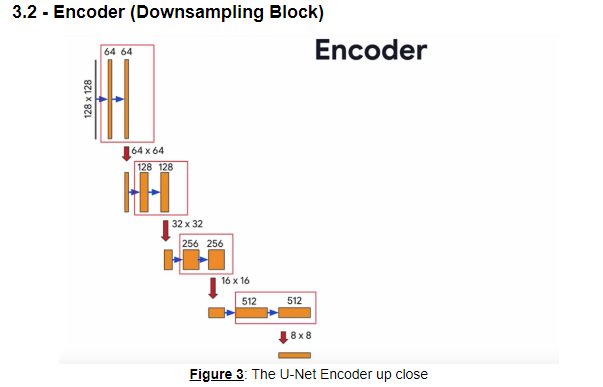

Notice that the number of filters doubles at each level (we'll handle that when assembling the model).
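
To see how the doubling plays out, here is a small pure-Python shape trace for the configuration used later in this post (input (96, 128, 3), n_filters=32, n_classes=23). It assumes 'same' padding everywhere, 2x2 max pooling in the first four encoder blocks, and stride-2 transposed convolutions in the decoder; unet_shapes is a hypothetical helper, not part of the assignment:

```python
def unet_shapes(input_size=(96, 128, 3), n_filters=32, n_classes=23):
    """Trace (height, width, channels) through the encoder/decoder of the U-Net."""
    h, w, _ = input_size
    skips = []
    # Encoder blocks 1-4: save a skip connection, then halve H and W
    for level in range(4):
        skips.append((h, w, n_filters * 2 ** level))
        h, w = h // 2, w // 2
    # Block 5 (bottleneck): 16x filters, no pooling
    bottleneck = (h, w, n_filters * 16)
    # Decoder: each block doubles H and W and halves the filter count
    decoder = []
    for level in reversed(range(4)):
        h, w = h * 2, w * 2
        decoder.append((h, w, n_filters * 2 ** level))
    output = (h, w, n_classes)  # after the final 1 x 1 conv
    return skips, bottleneck, decoder, output

skips, bottleneck, decoder, output = unet_shapes()
print("skips:", skips)
print("bottleneck:", bottleneck)
print("decoder:", decoder)
print("output:", output)
```

Each decoder level comes out with the same height, width and filter count as the skip connection it will be merged with, which is what makes the concatenation possible.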

I implemented the convs exactly as they appear in the figure and added optional dropout and pooling on top.

```python
from tensorflow.keras.layers import Conv2D, Dropout, MaxPooling2D

def conv_block(inputs=None, n_filters=32, dropout_prob=0, max_pooling=True):
    """
    Convolutional downsampling block

    Arguments:
        inputs -- Input tensor
        n_filters -- Number of filters for the convolutional layers
        dropout_prob -- Dropout probability
        max_pooling -- Use MaxPooling2D to reduce the spatial dimensions of the output volume
    Returns:
        next_layer, skip_connection -- Next layer and skip connection outputs
    """
    conv = Conv2D(n_filters,  # Number of filters
                  3,          # Kernel size
                  activation='relu',
                  padding='same',
                  kernel_initializer='he_normal')(inputs)
    conv = Conv2D(n_filters,  # Number of filters
                  3,          # Kernel size
                  activation='relu',
                  padding='same',
                  kernel_initializer='he_normal')(conv)

    # if dropout_prob > 0 add a dropout layer, with the variable dropout_prob as parameter
    if dropout_prob > 0:
        conv = Dropout(dropout_prob)(conv)

    # if max_pooling is True add a MaxPooling2D with 2x2 pool_size
    if max_pooling:
        next_layer = MaxPooling2D(2, strides=2)(conv)
    else:
        next_layer = conv

    # The pre-pooling activation is what the decoder reuses
    skip_connection = conv

    return next_layer, skip_connection
```

Next comes the Decoder.

It isn't very different, but it also has to merge in the skip connection.
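
The merge is just a concatenation along the channel axis, so the upsampled tensor and the skip connection must agree in height and width. A NumPy sketch with made-up activations, using the shapes of the deepest decoder level for a (96, 128, 3) input with n_filters=32:

```python
import numpy as np

# Made-up activations with a batch axis, channels-last: (batch, H, W, C)
up = np.zeros((1, 12, 16, 256))    # output of the transposed conv
skip = np.ones((1, 12, 16, 256))   # skip connection saved by the matching encoder block

# axis=3 is the channel axis for (batch, H, W, C) tensors, as in concatenate(..., axis=3)
merge = np.concatenate([up, skip], axis=3)
print(merge.shape)  # (1, 12, 16, 512)
```

The following 3 x 3 convs then bring the channel count back down to n_filters.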

```python
from tensorflow.keras.layers import Conv2D, Conv2DTranspose, concatenate

def upsampling_block(expansive_input, contractive_input, n_filters=32):
    """
    Convolutional upsampling block

    Arguments:
        expansive_input -- Input tensor from previous layer
        contractive_input -- Input tensor from previous skip layer
        n_filters -- Number of filters for the convolutional layers
    Returns:
        conv -- Tensor output
    """
    up = Conv2DTranspose(
                 n_filters,  # number of filters
                 3,          # Kernel size
                 strides=2,
                 padding='same')(expansive_input)

    # Merge the previous output and the contractive_input
    merge = concatenate([up, contractive_input], axis=3)
    conv = Conv2D(n_filters,  # Number of filters
                  3,          # Kernel size
                  activation='relu',
                  padding='same',
                  kernel_initializer='he_normal')(merge)
    conv = Conv2D(n_filters,  # Number of filters
                  3,          # Kernel size
                  activation='relu',
                  padding='same',
                  kernel_initializer='he_normal')(conv)

    return conv
```

Now that all the blocks are built, let's combine them into the model (really, you just assemble them as in the figure).

At the very end, a 1 x 1 conv maps the channels down to n_classes.
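
A 1 x 1 convolution mixes channels but never looks at neighboring pixels: it applies the same linear map (plus bias) to the channel vector of every pixel. A NumPy sketch with random made-up weights, using the shapes from this model (32 input channels, 23 classes, Keras-style kernel layout (1, 1, C_in, C_out)):

```python
import numpy as np

rng = np.random.default_rng(0)
H, W, C_IN, N_CLASSES = 96, 128, 32, 23

features = rng.standard_normal((H, W, C_IN))             # stand-in for the conv9 output of one image
kernel = rng.standard_normal((1, 1, C_IN, N_CLASSES))    # a 1 x 1 conv kernel
bias = rng.standard_normal(N_CLASSES)

# A 1 x 1 convolution is the same matrix multiply applied at every pixel
logits = np.einsum("hwc,ck->hwk", features, kernel[0, 0]) + bias

# Check one pixel against an explicit matrix-vector product
pixel = features[10, 20] @ kernel[0, 0] + bias
print(logits.shape, np.allclose(logits[10, 20], pixel))
```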

```python
import tensorflow as tf
from tensorflow.keras.layers import Input, Conv2D

def unet_model(input_size=(96, 128, 3), n_filters=32, n_classes=23):
    """
    Unet model

    Arguments:
        input_size -- Input shape
        n_filters -- Number of filters for the convolutional layers
        n_classes -- Number of output classes
    Returns:
        model -- tf.keras.Model
    """
    inputs = Input(input_size)
    # Contracting Path (encoding)
    # Add a conv_block with the inputs of the unet_ model and n_filters
    cblock1 = conv_block(inputs, n_filters)
    # Chain the first element of the output of each block to be the input of the next conv_block.
    # Double the number of filters at each new step
    cblock2 = conv_block(cblock1[0], n_filters * 2)
    cblock3 = conv_block(cblock2[0], n_filters * 4)
    cblock4 = conv_block(cblock3[0], n_filters * 8, dropout_prob=0.3)  # Include a dropout_prob of 0.3 for this layer
    # Include a dropout_prob of 0.3 for this layer, and avoid the max_pooling layer
    cblock5 = conv_block(cblock4[0], n_filters * 16, dropout_prob=0.3, max_pooling=False)

    # Expanding Path (decoding)
    # Add the first upsampling_block.
    # Use the cblock5[0] as expansive_input and cblock4[1] as contractive_input and n_filters * 8
    ublock6 = upsampling_block(cblock5[0], cblock4[1], n_filters * 8)
    # Chain the output of the previous block as expansive_input and the corresponding contractive block output.
    # Note that you must use the second element of the contractive block i.e before the maxpooling layer.
    # At each step, use half the number of filters of the previous block
    ublock7 = upsampling_block(ublock6, cblock3[1], n_filters * 4)
    ublock8 = upsampling_block(ublock7, cblock2[1], n_filters * 2)
    ublock9 = upsampling_block(ublock8, cblock1[1], n_filters)

    conv9 = Conv2D(n_filters,
                   3,
                   activation='relu',
                   padding='same',
                   kernel_initializer='he_normal')(ublock9)

    # Add a Conv2D layer with n_classes filter, kernel size of 1 and a 'same' padding
    conv10 = Conv2D(n_classes, 1, padding='same')(conv9)

    model = tf.keras.Model(inputs=inputs, outputs=conv10)

    return model
```

Build the model and compile it with a loss.
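
The compile call itself isn't shown here. Since conv10 has no activation, the model outputs raw logits, and with masks that hold one integer class id per pixel, a natural fit is Keras's SparseCategoricalCrossentropy(from_logits=True) (an assumption on my part, not shown in the post). A NumPy sketch of what that loss computes, averaged over pixels; sparse_ce_from_logits is a hypothetical name:

```python
import numpy as np

def sparse_ce_from_logits(logits, labels):
    """Mean per-pixel cross-entropy; logits (..., n_classes), labels (...) integer class ids."""
    # Numerically stable log-softmax over the class axis
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    # Pick the log-probability of the true class at every pixel
    true_log_probs = np.take_along_axis(log_probs, labels[..., None], axis=-1)
    return -true_log_probs.mean()

# Made-up 2 x 2 "image" with 3 classes
logits = np.array([[[2.0, 0.0, 0.0], [0.0, 2.0, 0.0]],
                   [[0.0, 0.0, 2.0], [1.0, 1.0, 1.0]]])
labels = np.array([[0, 1],
                   [2, 0]])
loss = sparse_ce_from_logits(logits, labels)
print(loss)
```

Three pixels are confidently correct and contribute log(e^2 + 2) - 2 each; the last pixel is uniform and contributes log 3.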

Let's go straight to the results.
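
To display a prediction, the per-pixel logits have to become a single class-id image. A minimal sketch, assuming the display step takes the argmax over the class channel (create_mask_np is a hypothetical name); no softmax is needed because it doesn't change which class is largest:

```python
import numpy as np

def create_mask_np(pred_logits):
    """Turn (H, W, n_classes) model output into an (H, W, 1) class-id mask."""
    # Most likely class at each pixel, with a trailing channel axis for display
    return np.argmax(pred_logits, axis=-1)[..., np.newaxis]

pred = np.array([[[0.1, 3.0, -1.0], [2.0, 0.5, 0.0]],
                 [[-1.0, -2.0, 0.3], [0.0, 0.0, 5.0]]])
mask = create_mask_np(pred)
print(mask[..., 0])  # [[1 0]
                     #  [2 2]]
```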

Around epoch 17 there is a stretch where accuracy drops sharply, and I'm not sure why.

Here are a few of the results. It works well.
